GPU Storage Cluster

Enterprises still haven't brought their own data into any of the models out there. If they want to, storage is a big part of the job, and arguably the most demanding part. You need to fit the data into as small a footprint as possible, organize it so it can be fed into training, and take frequent checkpoints because GPUs fail a lot. Storage touches every one of those building blocks.
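Because GPU jobs fail often, training loops usually write checkpoints at regular intervals and resume from the most recent one. Below is a minimal sketch of that pattern in plain Python. It assumes a local checkpoint directory and a toy training state (a step counter and a list of weights). The names `save_checkpoint`, `latest_checkpoint`, `train`, and the `ckpt_*.json` file layout are hypothetical and illustrative only; they are not any storage product's API. Each checkpoint is first written to a temporary file and then atomically renamed, so a crash in the middle of a write never leaves a half-written file that looks valid.

```python
import json
import os
import tempfile
from pathlib import Path
from typing import Optional


def save_checkpoint(ckpt_dir: Path, step: int, state: dict) -> Path:
    """Atomically write `state` as the checkpoint for `step` (hypothetical layout)."""
    ckpt_dir.mkdir(parents=True, exist_ok=True)
    final_path = ckpt_dir / f"ckpt_{step:08d}.json"
    # Write to a temp file in the same directory, then rename it into place.
    # os.replace is atomic when source and target share a filesystem, so a
    # crash mid-write never leaves a truncated file under the final name.
    fd, tmp_name = tempfile.mkstemp(dir=ckpt_dir, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step, "state": state}, f)
        f.flush()
        os.fsync(f.fileno())  # push the bytes to the storage device
    os.replace(tmp_name, final_path)
    return final_path


def latest_checkpoint(ckpt_dir: Path) -> Optional[dict]:
    """Return the newest complete checkpoint, or None if there isn't one."""
    # Zero-padded step numbers make lexical order match step order.
    ckpts = sorted(ckpt_dir.glob("ckpt_*.json")) if ckpt_dir.exists() else []
    if not ckpts:
        return None
    with ckpts[-1].open() as f:
        return json.load(f)


def train(ckpt_dir: Path, total_steps: int, every: int) -> dict:
    """Toy training loop that resumes from the latest checkpoint, if any."""
    resumed = latest_checkpoint(ckpt_dir)
    step = resumed["step"] if resumed else 0
    weights = resumed["state"]["weights"] if resumed else [0.0, 0.0]
    while step < total_steps:
        step += 1
        weights = [w + 0.1 for w in weights]  # stand-in for a real optimizer step
        if step % every == 0:
            save_checkpoint(ckpt_dir, step, {"weights": weights})
    return {"step": step, "weights": weights}
```

If a job dies and `train` is called again, it picks up at the last saved step. Any work done after that checkpoint is recomputed. Checkpoint frequency is therefore a trade-off between write load on storage and how much work you lose.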

The question of whether to build in the cloud or on-premises still persists, but cost remains the deciding factor, and most organizations find it more economical to build their own. Unfortunately, their data centers aren't up to the task. They face challenges with power, storage, liquid cooling, and more, which in turn raises the problem of finding people with the right skill sets. That puts CIOs under pressure to find the most cost-effective vendor to partner with on delivery. It's why players like Vast Data, DDN, and Weka are beginning to take market share in this highly competitive space.

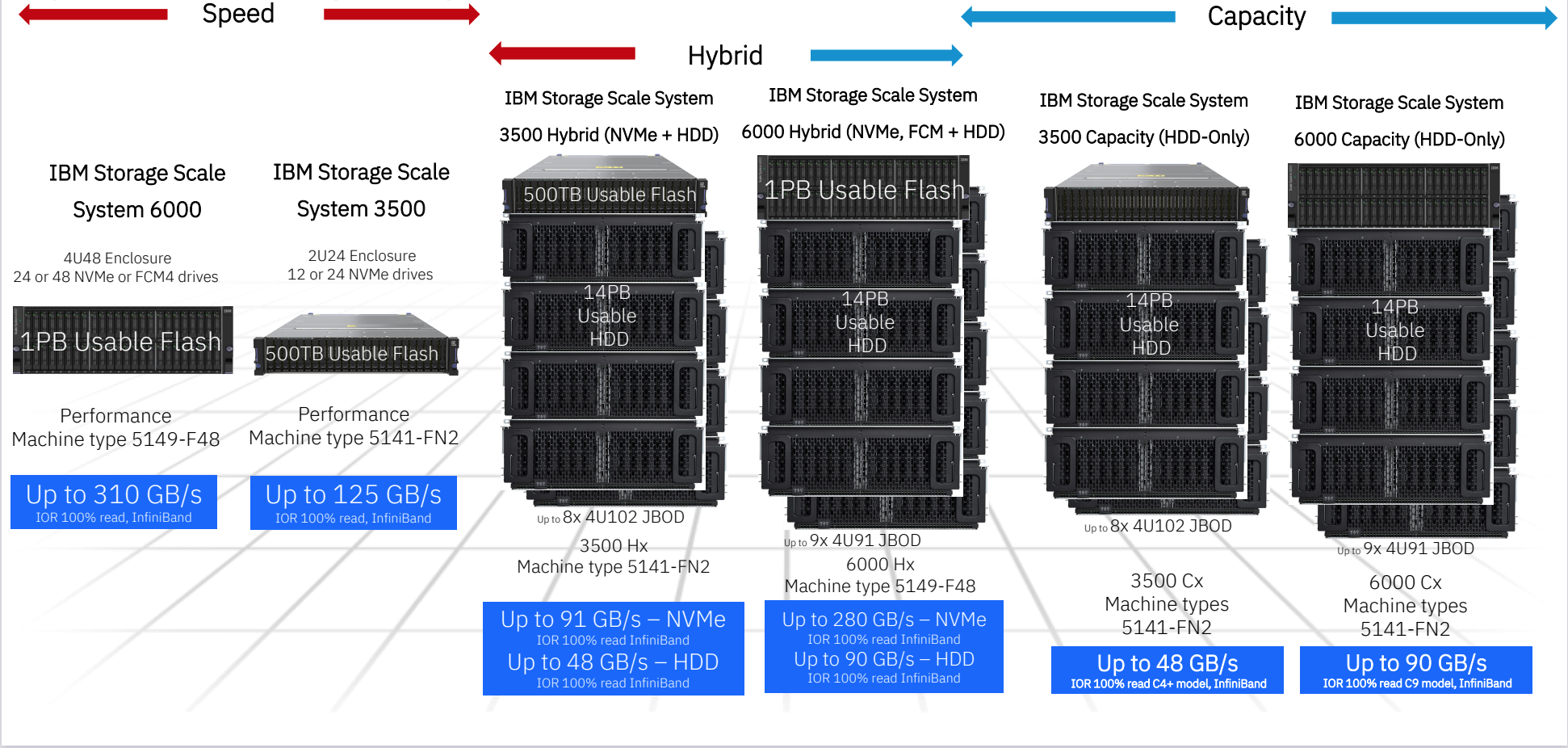

Scaling storage is about reliability, resiliency, and low latency. The IBM Storage Scale System 6000, benchmarked with NVIDIA GPUDirect in SuperPOD configurations, delivered 310 GB/s of read throughput and 155 GB/s of write throughput. See the comparisons below.
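A quick back-of-the-envelope calculation helps put those throughput figures in perspective. The sketch below assumes the quoted peak rates (310 GB/s read, 155 GB/s write) hold for the entire transfer, and it uses decimal units (1 TB = 1,000 GB). The 100 TB dataset size and the 2 TB checkpoint size are illustrative assumptions, not measured results. Real jobs rarely sustain peak throughput, so read these as best-case transfer times.

```python
READ_GB_PER_S = 310.0   # quoted peak read throughput
WRITE_GB_PER_S = 155.0  # quoted peak write throughput


def transfer_seconds(size_tb: float, rate_gb_per_s: float) -> float:
    """Seconds to move `size_tb` terabytes at a sustained rate (1 TB = 1,000 GB)."""
    return size_tb * 1000.0 / rate_gb_per_s


# Hypothetical workload sizes, for illustration only.
full_read = transfer_seconds(100, READ_GB_PER_S)  # one pass over a 100 TB dataset
ckpt_write = transfer_seconds(2, WRITE_GB_PER_S)  # one 2 TB checkpoint

print(f"100 TB read:     {full_read / 60:.1f} min")
print(f"2 TB checkpoint: {ckpt_write:.1f} s")
```

At those peak rates, a full pass over 100 TB takes about 5.4 minutes, and a 2 TB checkpoint takes about 12.9 seconds.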

Searching an AI Cluster

Computer vision is massive, and so is RAG (retrieval-augmented generation) for finding information and ingesting all that data. To build this, teams are standing up vector databases, copying files out of storage into them, and running all sorts of vectorization on GPUs. I'm not sure how fast those operations really are. Instead of copying data around just to vectorize it, why not do that work right in the storage layer and vectorize incrementally as the data changes? That's how you achieve scale at the RAG layer for highly available (HA) search, if you don't want to get stuck at proofs of concept (PoCs).
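Vectorizing data where it lives, and only when it changes, can be sketched as an incremental indexer. The indexer fingerprints each file's content, re-embeds only the files whose fingerprint has changed, and drops the vectors for deleted files. This is a minimal illustration under stated assumptions, not a description of any storage product's built-in feature. The `embed` function is a hypothetical stand-in (a hashed bag-of-words vector) for a real GPU embedding model. The in-memory `files` dict stands in for a storage namespace. A real deployment would react to the storage system's change events instead of rescanning everything.

```python
import hashlib
import math
from collections import Counter

DIM = 256  # hypothetical embedding width


def embed(text: str) -> list[float]:
    """Stand-in for a real embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for word, count in Counter(text.lower().split()).items():
        idx = int(hashlib.sha256(word.encode()).hexdigest(), 16) % DIM
        vec[idx] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class IncrementalIndex:
    """Keeps one vector per file and re-embeds only files whose content changed."""

    def __init__(self) -> None:
        self.fingerprints: dict[str, str] = {}
        self.vectors: dict[str, list[float]] = {}

    def sync(self, files: dict[str, str]) -> dict[str, int]:
        """Bring the index in line with `files` (path -> content); return change counts."""
        stats = {"embedded": 0, "skipped": 0, "deleted": 0}
        for path, content in files.items():
            digest = hashlib.sha256(content.encode()).hexdigest()
            if self.fingerprints.get(path) == digest:
                stats["skipped"] += 1  # unchanged: no embedding work needed
                continue
            self.vectors[path] = embed(content)
            self.fingerprints[path] = digest
            stats["embedded"] += 1
        for path in list(self.vectors):
            if path not in files:  # file no longer exists in storage
                del self.vectors[path]
                del self.fingerprints[path]
                stats["deleted"] += 1
        return stats

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return the top-k paths by cosine similarity (all vectors are unit length)."""
        q = embed(query)
        scored = [(p, sum(a * b for a, b in zip(q, v))) for p, v in self.vectors.items()]
        return sorted(scored, key=lambda item: item[1], reverse=True)[:k]
```

When `sync` is called a second time on unchanged data, every file is skipped. Only edited or new files incur embedding cost. That property is what makes "vectorize as data changes" cheaper than re-copying and re-embedding everything.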

Considering that 82% of enterprises say data quality is a barrier to their data integration projects, getting the storage foundation right matters. With the right CAPEX investment, you can lower TCO by up to 70% per TB and run your business operations far more efficiently, assuming you're working with the right team.
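TCO per TB is simple to compute once you decide what goes into it. The sketch below uses a common simplified definition: upfront CAPEX plus annual OPEX over the system's service life, divided by usable capacity. It then compares a cloud option against an on-premises option. Every cost figure is a hypothetical placeholder chosen for illustration. None of them is a quote or a measured result, and the "up to 70%" figure depends entirely on your real inputs.

```python
from dataclasses import dataclass


@dataclass
class StorageOption:
    name: str
    capex: float          # upfront hardware/software spend (hypothetical)
    opex_per_year: float  # power, cooling, support, staff (hypothetical)
    usable_tb: float      # usable capacity in TB
    years: int            # assumed service life

    def tco_per_tb(self) -> float:
        """Simplified TCO per usable TB over the service life (no discounting)."""
        total = self.capex + self.opex_per_year * self.years
        return total / self.usable_tb


def reduction_pct(baseline: StorageOption, candidate: StorageOption) -> float:
    """Percentage by which `candidate` lowers TCO per TB relative to `baseline`."""
    return 100.0 * (1 - candidate.tco_per_tb() / baseline.tco_per_tb())


# Hypothetical numbers for illustration only.
cloud = StorageOption("cloud", capex=0, opex_per_year=400_000, usable_tb=100, years=5)
onprem = StorageOption("on-prem", capex=600_000, opex_per_year=100_000, usable_tb=100, years=5)

print(f"{cloud.name}: {cloud.tco_per_tb():,.0f} per TB")
print(f"{onprem.name}: {onprem.tco_per_tb():,.0f} per TB")
print(f"reduction: {reduction_pct(cloud, onprem):.1f}%")
```

With these made-up inputs, on-premises comes out 45% cheaper per TB. Shorter service lives, low utilization of usable capacity, or higher staffing OPEX can shrink that gap or reverse it.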

We built our AI storage cluster on the IBM Storage Scale System 6000 in IBM's hybrid cloud, with 100 TB of storage capacity. It powers our market intelligence business, which serves more than 25 clients across oil and gas, healthcare, food and beverage (F&B), and real estate.

Need a storage cluster for your AI project? Engage us at cloud@huntrecht.com